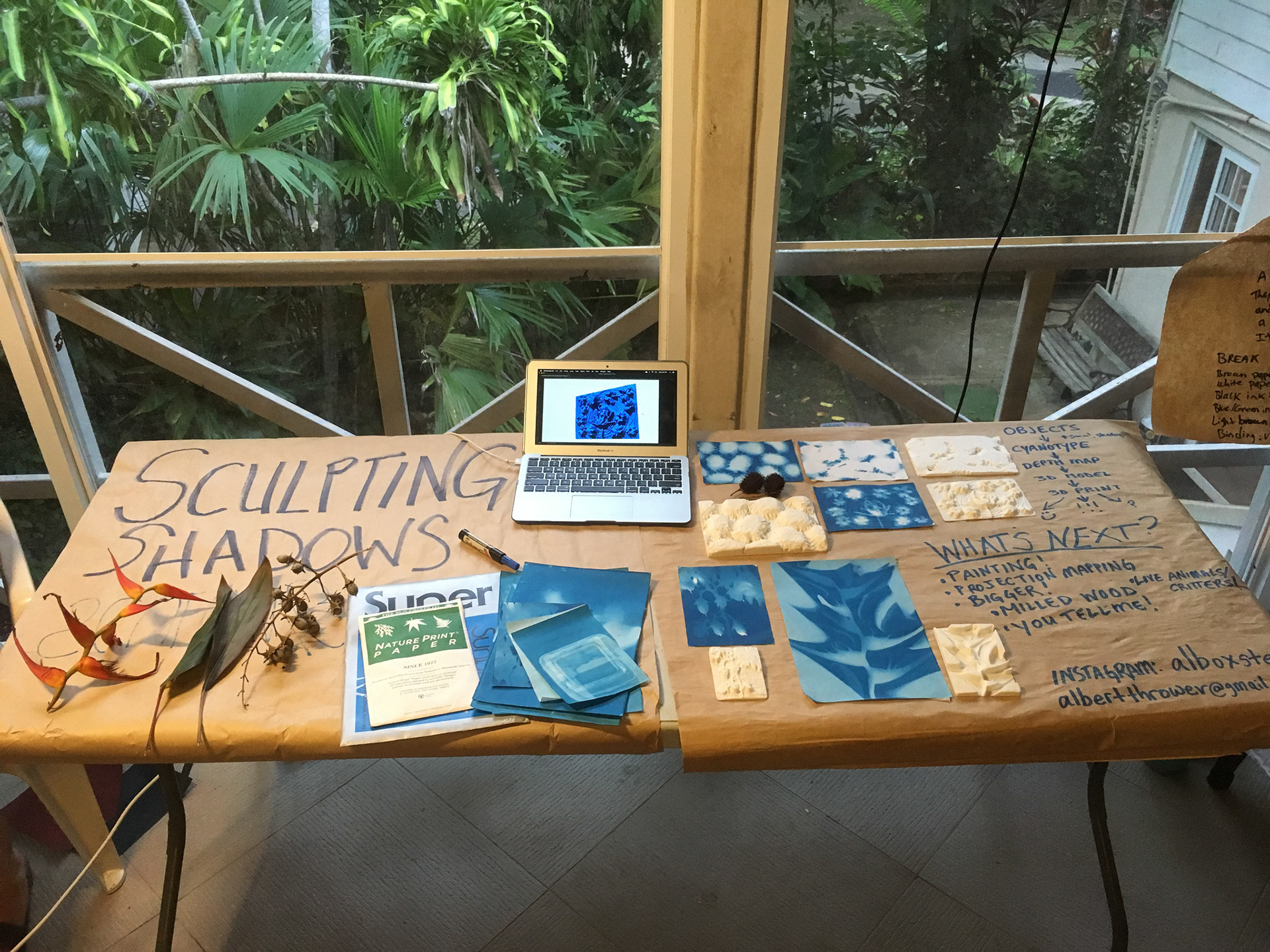

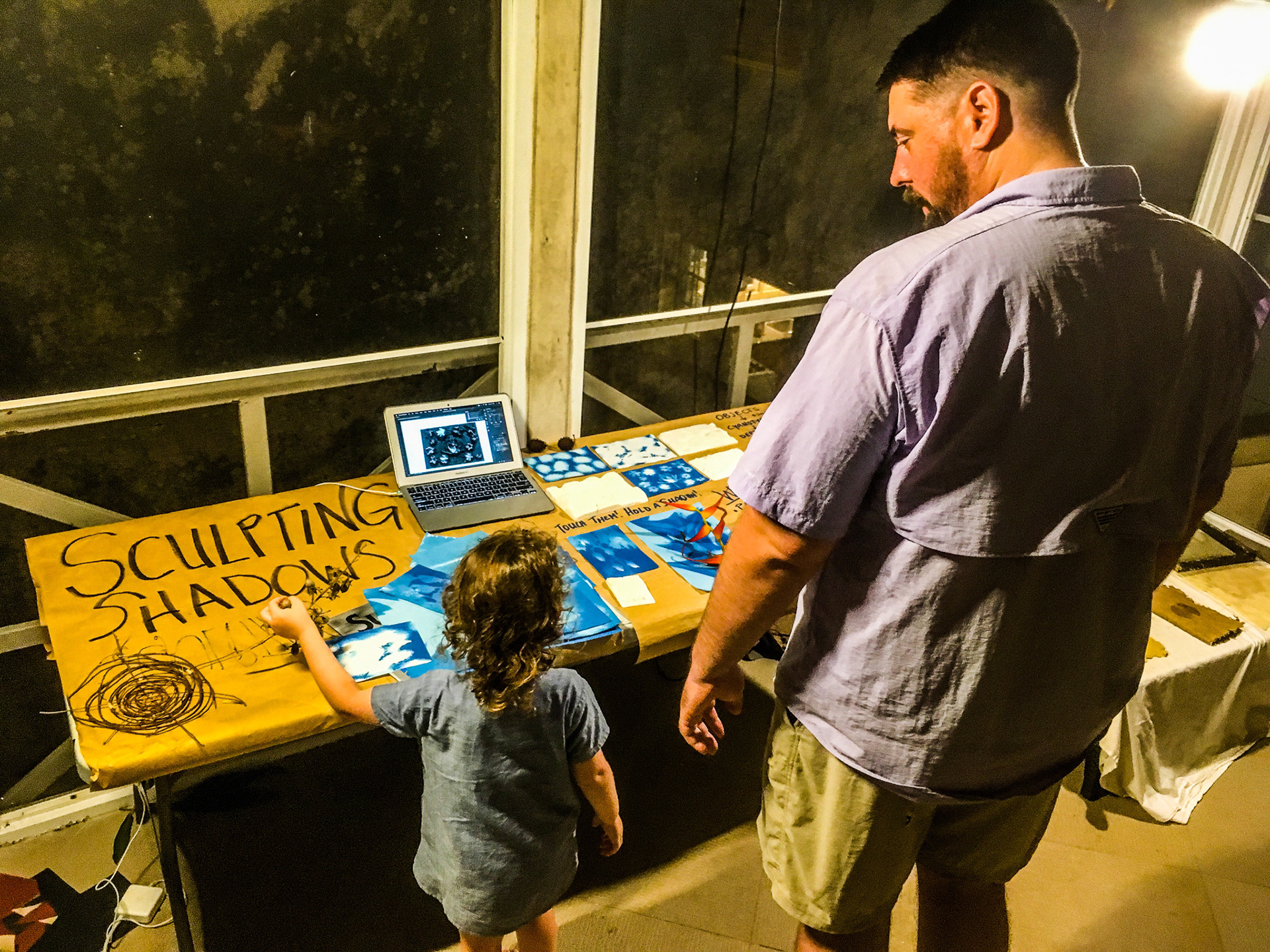

By Albert Thrower – albertthrower@gmail.com

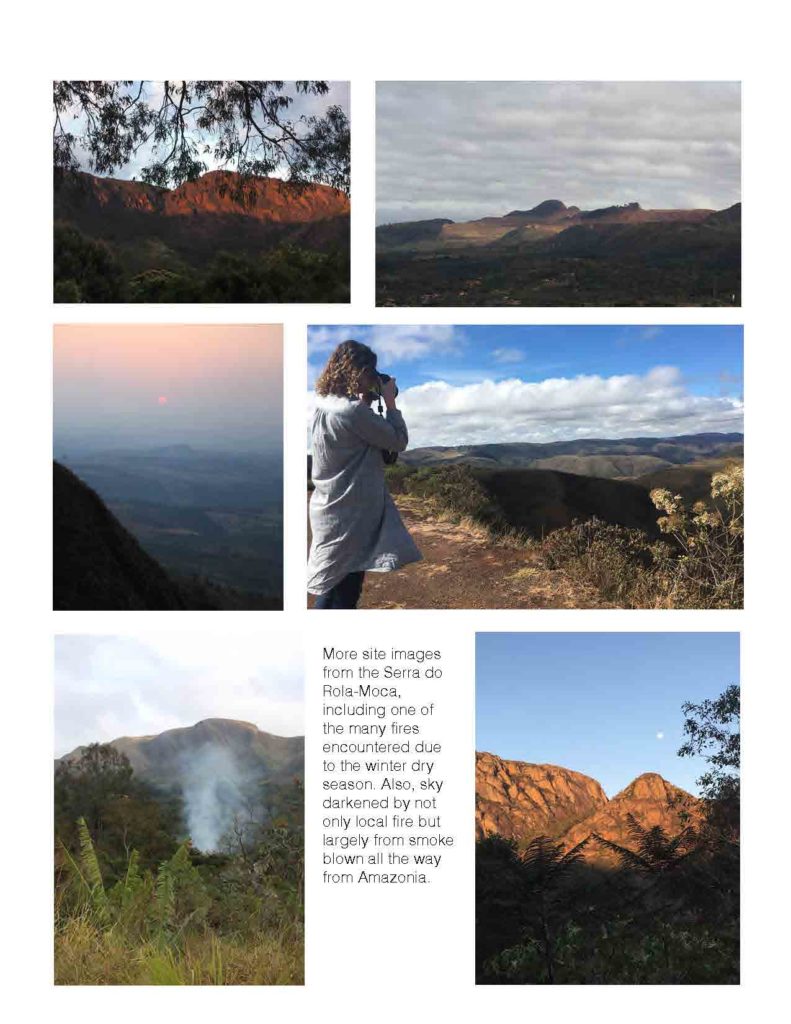

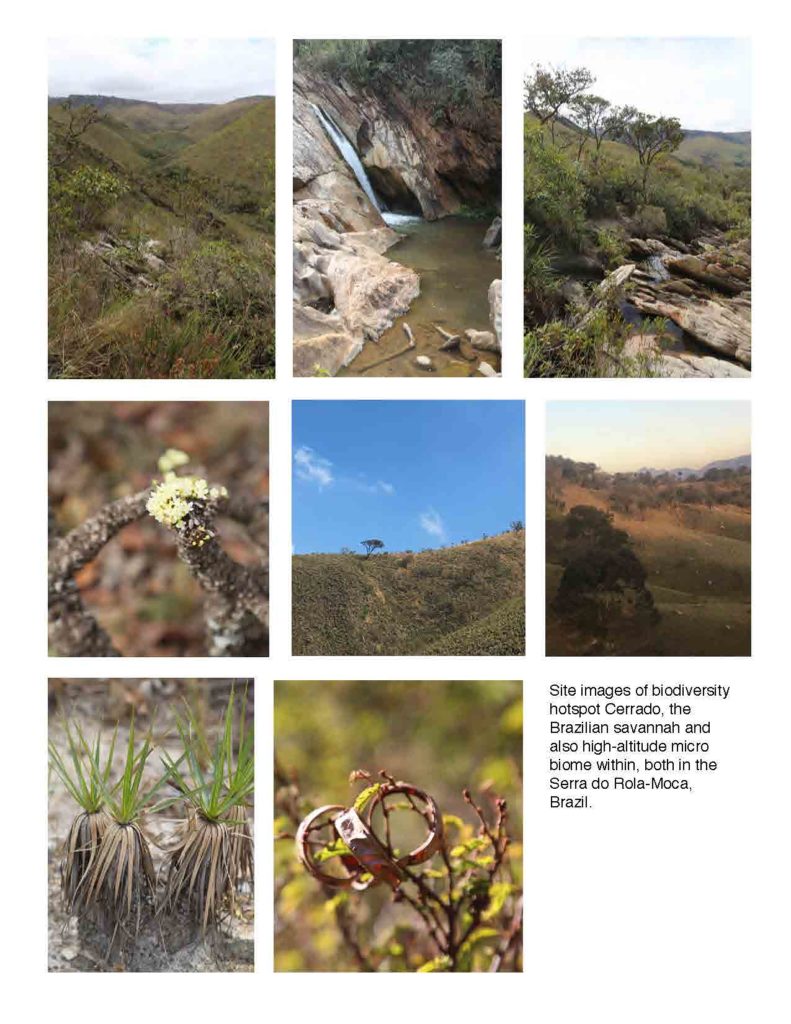

OVERVIEW

In this project, I created three-dimensional sculptural artworks derived from the shadows cast by found objects.

BACKGROUND

I began creating 3D prints through unusual processes in 2018, when I used oils to essentially paint a 3D shape. For me, this was a fun way to dip my toes into 3D modeling and printing using the skills I already had (painting) rather than those I didn’t (3D modeling). I was very happy with the output of this process, which I think lent the 3D model a unique texture–it wore its paint-ishness proudly, with bumpy ridges and ravines born from brushstrokes. There was an organic quality that I didn’t often see in 3D models fabricated digitally. I immediately began thinking of other unconventional ways to arrive at 3D shapes, and cyanotype solar prints quickly rose to the top of processes I was excited to try.

SHADOWS AND DIMENSIONS

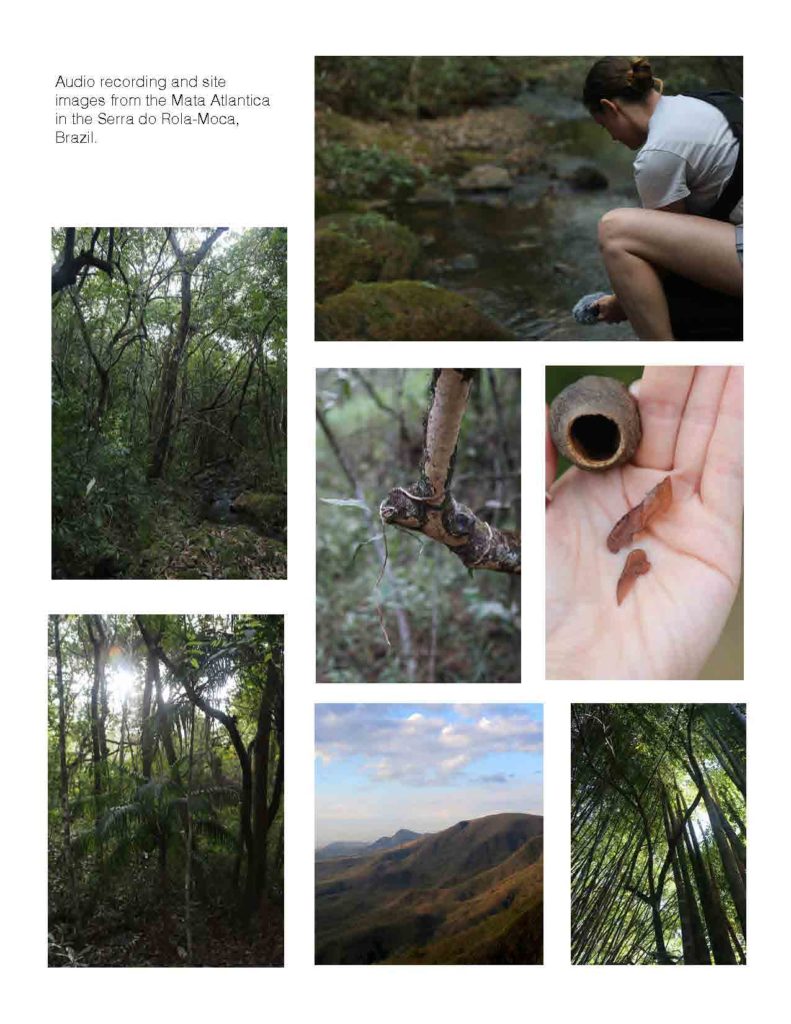

My initial goal with this project was simply to test my theory that I could create interesting sculpture through the manipulation of shadow. However, a presentation by Josh Michaels on my first night at Dinacon got me thinking more about shadows and what they represent in the relationships between dimensions. Josh showed Carl Sagan’s famous explanation of the 4th dimension from Cosmos.

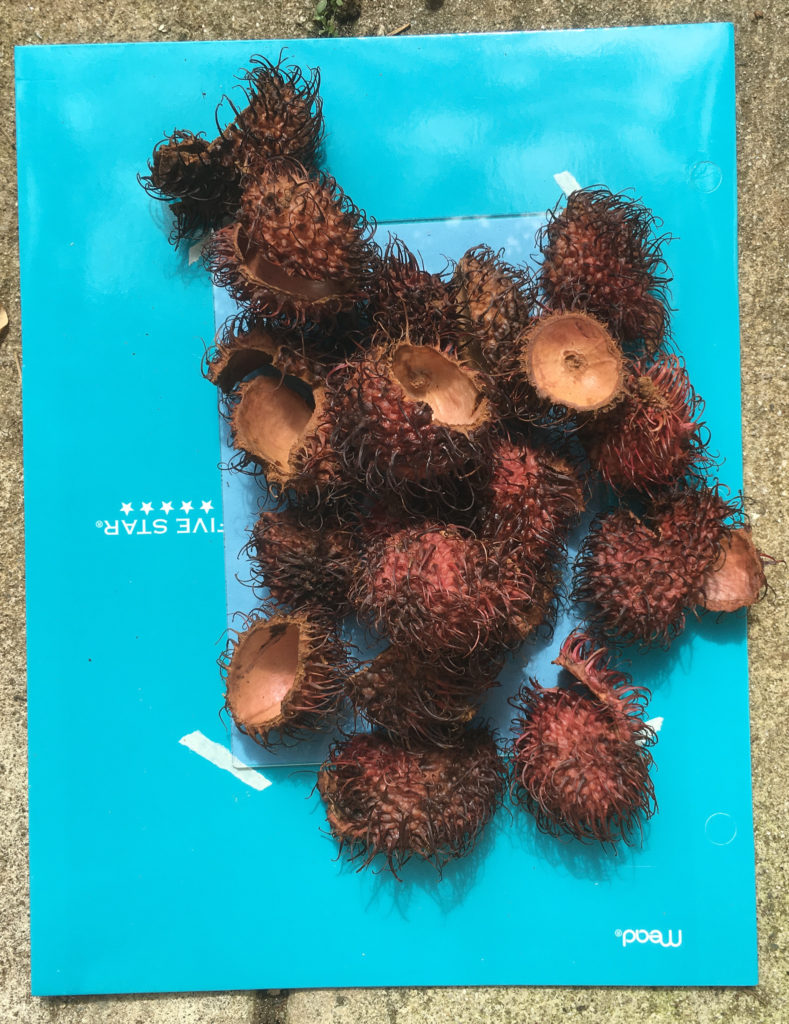

Sagan illustrates how a shadow is an imperfect two-dimensional projection of a three-dimensional object. I wondered–if all we had was a two-dimensional shadow, what could we theorize about the three-dimensional object? If we were the inhabitants of Plato’s cave, watching the shadows of the world play on the wall, what objects could we fashion from the clay at our feet to reflect what we imagined was out there? What stories could we ascribe to these imperfectly theorized forms? When early humans saw the night sky, we couldn’t see the three-dimensional reality of space and stars–we saw a two-dimensional tapestry from which we theorized three-dimensional creatures and heroes and villains and conflicts and passions. We looked up and saw our reflection. What does a rambutan shadow become without the knowledge of a rambutan, with instead the innate human impulse to project meaning and personality and story upon that which we cannot fully comprehend? That’s what I became excited to explore with this project. But first, how to make the darn things?

THE PROCESS

For those who want to try this at home, I have written a detailed How To about the process on my website. But the basic workflow I followed was this:

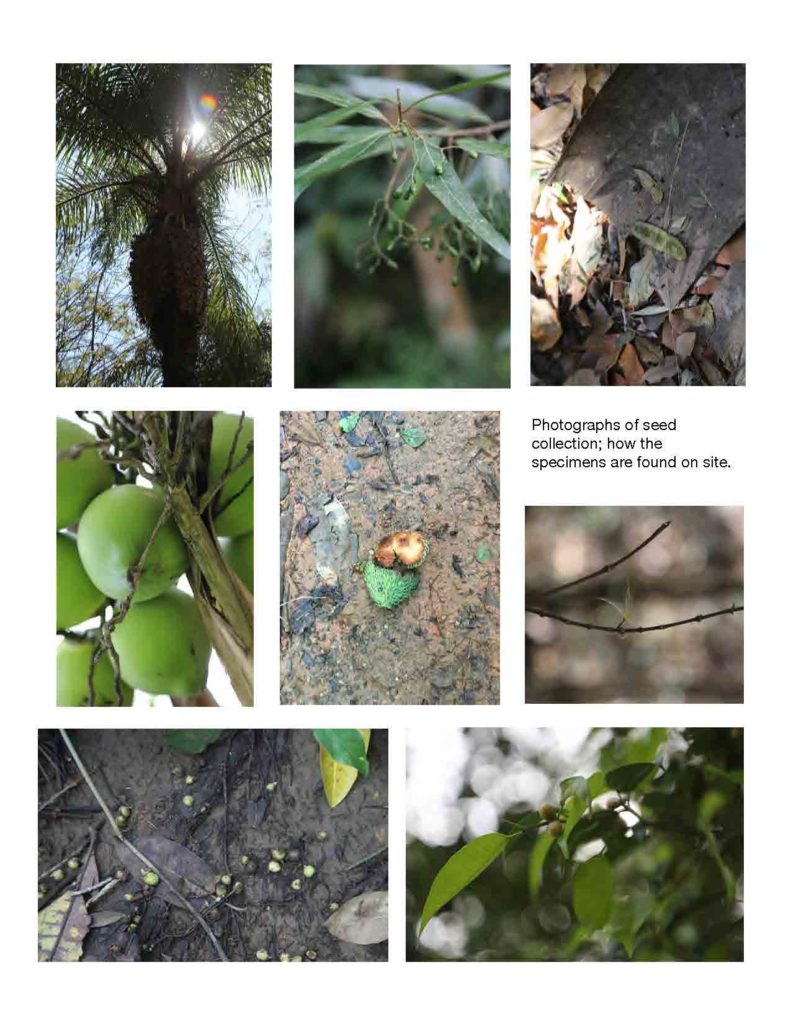

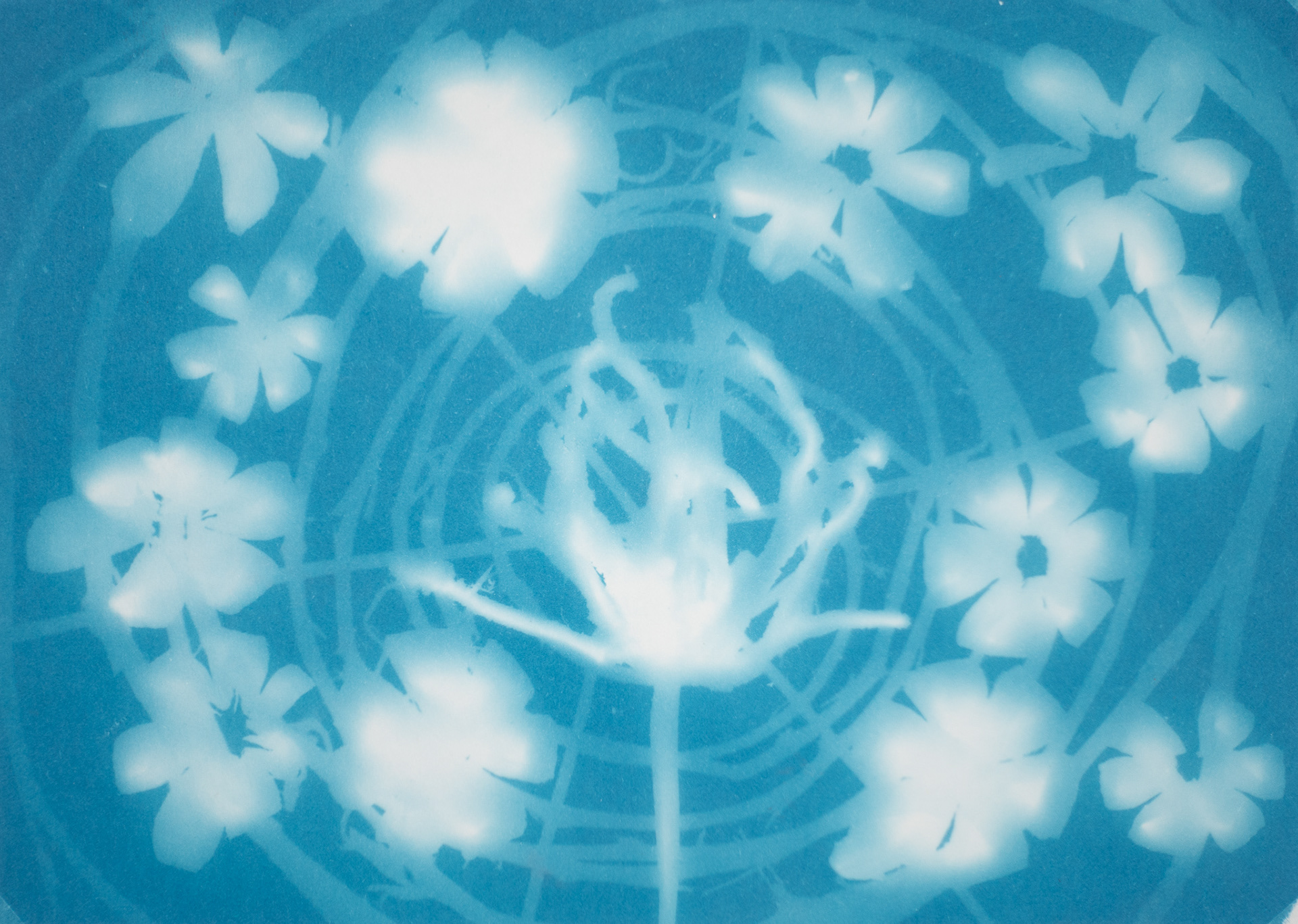

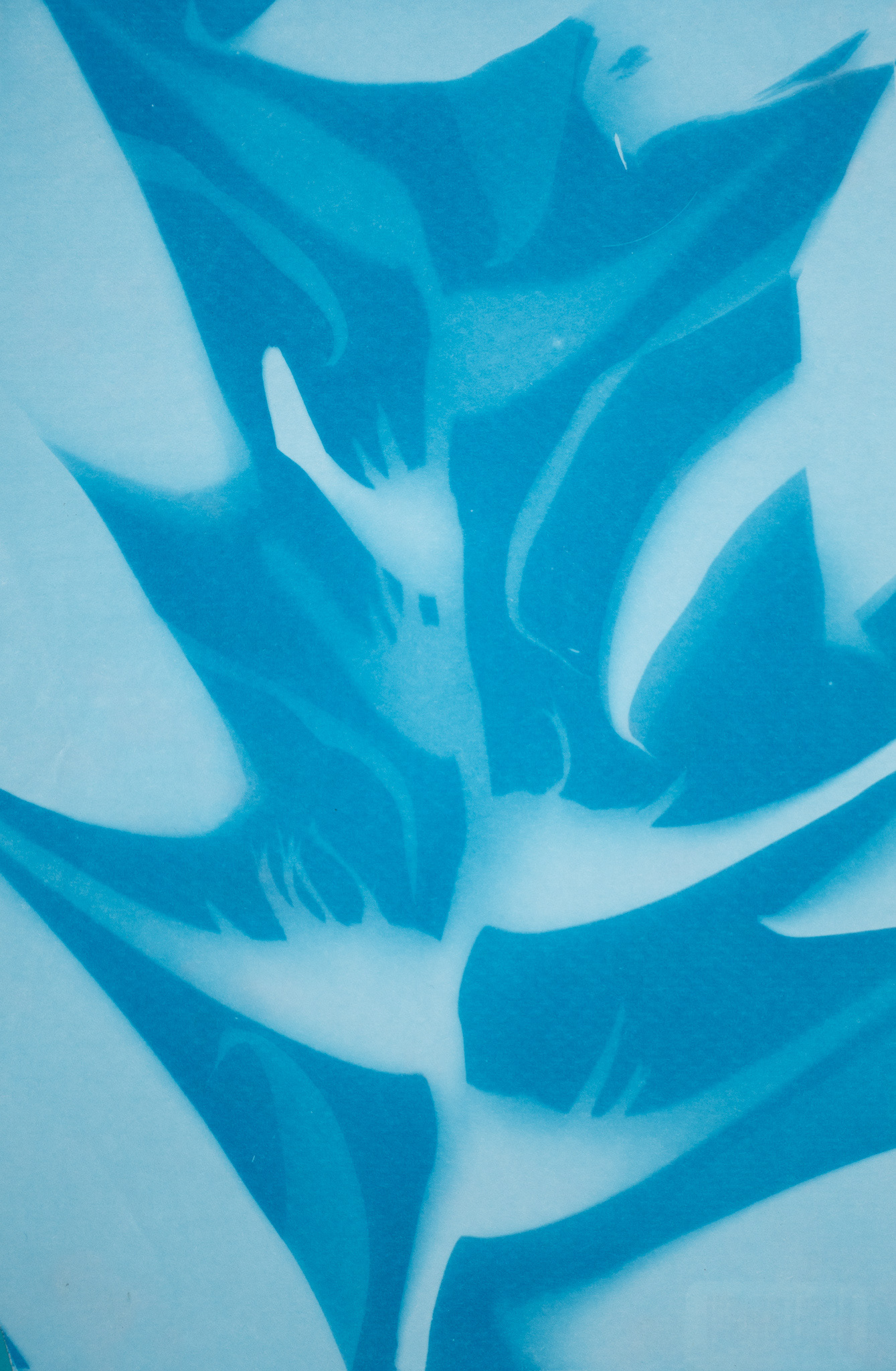

STEP 1: MAKE A SOLAR PRINT OF SOME INTERESTING OBJECTS

The areas that are more shaded by our objects stay white, and the areas that the sun hits become a darker blue. Note that the solar print that results from three-dimensional objects like these rambutans has some midtones that follow their curves, because though they cast hard shadows, some light leaks in from the sides. The closer an object gets to the solar paper, the more light it blocks. This effect will make a big difference in how these prints translate to 3D models.

STEP 2: USE THE SOLAR PRINT AS A DEPTH MAP TO CREATE A 3D MODEL

For those unfamiliar with depth maps, essentially the software* interprets the luminance data of a pixel (how bright it is) as depth information. Depth maps can be used for a variety of applications, but in this case the lightest parts of the image become the more raised parts of the 3D model, and the darker parts become the more recessed parts. For our solar prints, what this means is that the areas where our objects touched the paper (or at least came very close to it) will be white and therefore raised, the areas that weren’t shaded at all by our objects will become dark and therefore recessed, and the areas that are shaded but which some light can leak into around the objects will be our mid-tones, and will lead to some smooth graded surfaces in the 3D model.

*I used Photoshop for this process, but if you have a suggestion for a free program that can do the same, please contact me. I’d like for this process to be accessible to as many people as possible.
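For readers without Photoshop, the luminance-to-depth idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Photoshop workflow used for this project: the function names, the `max_height`, `base`, and `pixel_size` parameters, and the 0 to 255 luminance grid are all hypothetical choices made for the example. Loading a photo of the print into such a grid is left out; an image library such as Pillow could do that step. The mesh covers only the top surface, so it would still need walls and a bottom before a 3D printer could use it.

```python
def luminance_to_heights(pixels, max_height=10.0, base=1.0):
    """Map 0-255 luminance to height: white is raised, dark is recessed."""
    return [[base + (p / 255.0) * max_height for p in row] for row in pixels]


def heights_to_triangles(heights, pixel_size=1.0):
    """Build two upward-facing triangles per grid cell (top surface only)."""
    triangles = []
    rows, cols = len(heights), len(heights[0])
    for y in range(rows - 1):
        for x in range(cols - 1):
            # Four corners of this grid cell as (x, y, z) points.
            a = (x * pixel_size, y * pixel_size, heights[y][x])
            b = ((x + 1) * pixel_size, y * pixel_size, heights[y][x + 1])
            c = (x * pixel_size, (y + 1) * pixel_size, heights[y + 1][x])
            d = ((x + 1) * pixel_size, (y + 1) * pixel_size, heights[y + 1][x + 1])
            triangles.append((a, b, c))
            triangles.append((b, d, c))
    return triangles


def triangles_to_ascii_stl(triangles, name="solar_print"):
    """Serialize triangles as ASCII STL text."""
    lines = [f"solid {name}"]
    for tri in triangles:
        # Normals are written as zeros here; many tools recompute them.
        lines.append("  facet normal 0 0 0")
        lines.append("    outer loop")
        for vx, vy, vz in tri:
            lines.append(f"      vertex {vx:.4f} {vy:.4f} {vz:.4f}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines)


# A tiny made-up "print": one white pixel, one midtone, two dark pixels.
sample = [[0, 255], [128, 0]]
stl_text = triangles_to_ascii_stl(heights_to_triangles(luminance_to_heights(sample)))
```

Writing `stl_text` to a file with an `.stl` extension should give something a mesh viewer can open. The white pixel ends up as the tallest corner and the midtone sits roughly halfway up, which is the same behavior described for the graded surfaces above.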

Below, you can play around with some 3D models alongside the solar prints from which they were derived. Compare them to see how subtle variations in the luminance information from the 2D image have been translated into depth information to create a 3D model.

In the below solar print, I laid a spiralled vine over the top of the other objects being printed. Because it was raised off the paper by the other objects, light leaked in and created a fainter shadow, resulting in a cool background swirl in the 3D model. Manipulating objects’ distance from the paper proved to be an effective method to create foreground/background separation in the final 3D model.

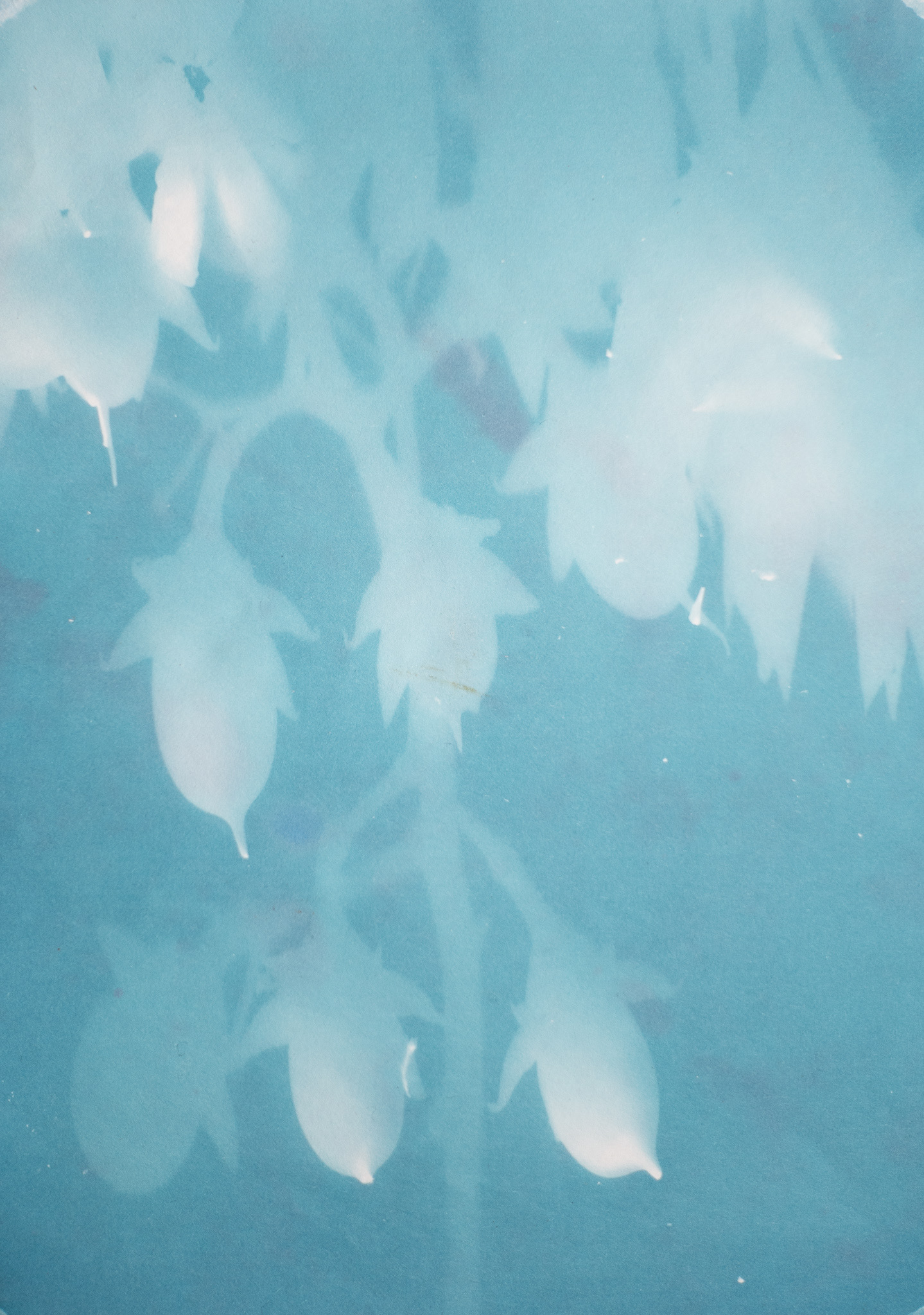

Another variable that I manipulated to create different levels in the 3D model was exposure time. The fainter leaves coming into the below solar print weren’t any farther from the solar paper than the other leaves, but I placed them after the solar print had been exposed for a couple of minutes. This made their resulting imprint fainter/darker, and therefore more backgrounded than the leaves that had been there for the duration of the exposure. You can also see where some of the leaves moved during the exposure, as they have a faint double image that creates a cool “step” effect in the 3D model. You might also notice that the 3D model has more of a texture than the others on this page. That comes from the paper itself, which is a different brand than I used for the others. The paper texture creates slight variations in luminance which translate as bump patterns in the model. You run into a similar effect with camera grain–even at low ISOs, the slight variation in luminance from pixel to pixel can look very pronounced when translated to 3D. I discuss how to manage this in the How To page for this process.
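One common way to tame this kind of pixel-to-pixel variation is to blur the depth map slightly before converting it to 3D. The sketch below is an assumption about how that could look, not the method from the How To page: `box_blur` and its `radius` parameter are hypothetical names, and the input is assumed to be a grid of 0 to 255 luminance values. Each pixel is replaced by the average of its neighbors, which flattens isolated bumps at the cost of some fine detail.

```python
def box_blur(pixels, radius=1):
    """Replace each pixel with the mean of its neighborhood.

    Neighborhoods are clipped at the image edges, so border pixels
    average over fewer values than interior pixels.
    """
    rows, cols = len(pixels), len(pixels[0])
    blurred = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            total = 0
            count = 0
            for ny in range(max(0, y - radius), min(rows, y + radius + 1)):
                for nx in range(max(0, x - radius), min(cols, x + radius + 1)):
                    total += pixels[ny][nx]
                    count += 1
            out_row.append(total / count)
        blurred.append(out_row)
    return blurred


# A flat midtone area with one bright speck of "grain" in the middle.
grainy = [
    [100, 100, 100],
    [100, 190, 100],
    [100, 100, 100],
]
smoothed = box_blur(grainy, radius=1)
```

In this made-up example the speck stands 90 luminance levels above its surroundings before blurring, and the spread across the whole patch drops to about 12 levels afterward. A larger `radius` smooths more aggressively, so it is worth trying small values first to keep details like leaf veins.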

One more neat thing about this one is that I made the print on top of a folder that had a barcode on it, and that reflected back enough light through the paper that it came out in the solar print and the 3D model (in the bottom right). After I noticed this I started exposing my prints on a solid black surface.

The below solar print was made later in the day–notice the long shadows. It was also in the partial shade of a tree, so the bottom left corner of the print darkens. If you turn the 3D model to its side you’ll see how that light falloff results in a thinning of the model. I also took this photo before the print had fully developed the deep blue it would eventually reach, and that lack of contrast results in the faint seedpod in the bottom left not differentiating itself much from the background in the 3D model. I found that these prints could take a couple days to fully “develop.”

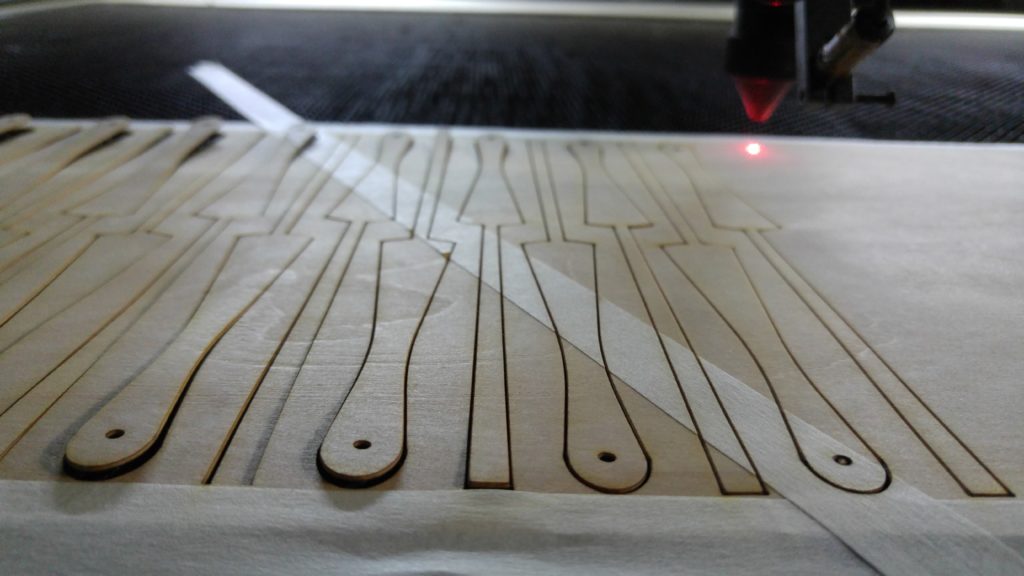

STEP 3: 3D PRINT THE MODEL

The 3D models that Photoshop spits out through this process can sometimes have structural problems that a 3D printer doesn’t quite know how to deal with. I explain these problems and how to fix them in greater detail in the How To page for this process.

STEP 4: PAINT THE 3D PRINT

Now we get back to my musings about Plato’s cave. My goal in the painting stage was to find meaning and story in this extrapolation of 3D forms from a 2D projection. As of this writing I have only finished one of these paintings, pictured below.

FUTURE DIRECTIONS

– Carve the models out of wood with a CNC milling machine to reduce plastic use. I actually used PLA, which is derived from corn starch and is biodegradable under industrial conditions, but is still not ideal. This will also allow me to go BIGGER with the sculptural pieces, which wouldn’t be impossible with 3D printing but would require some tedious labor to bond together multiple prints.

– Move away from right angles! Though I was attempting to make some unusual “canvasses” for painting, I ended up replicating the rectangular characteristics of traditional painting surfaces, which seems particularly egregious when modeling irregular organic shapes. Creating non-rectangular pieces will require making prints that capture the entire perimeter of the objects’ shadows without cutting them off. I can then tell the software to “drop out” the negative space. I have already made some prints that I think will work well for this, and I’ll update this page once I 3D model them.

– Build a custom solar printing rig to allow for more flexibility in constructing interesting prints. A limitation of this process was that I wanted to create complex and delicate compositions of shadows but it was hard to not disturb the three-dimensional objects when moving between the composition and exposure phases. My general process in this iteration of the project was to arrange the objects on a piece of plexiglass on top of an opaque card on top of the solar print. This allowed me time to experiment with arrangements of the objects, but the process of pulling the opaque card out to reveal the print inevitably disrupted the objects and then I would have to scramble to reset them as best I could. Arranging the objects inside wasn’t a good option because I couldn’t see the shadows the sun would cast, which were essentially the medium I was working with. The rig I imagine to solve this would be a frame with a transparent top and a sliding opaque board which could be pulled out to reveal the solar paper below without disrupting the arrangement of objects on top.

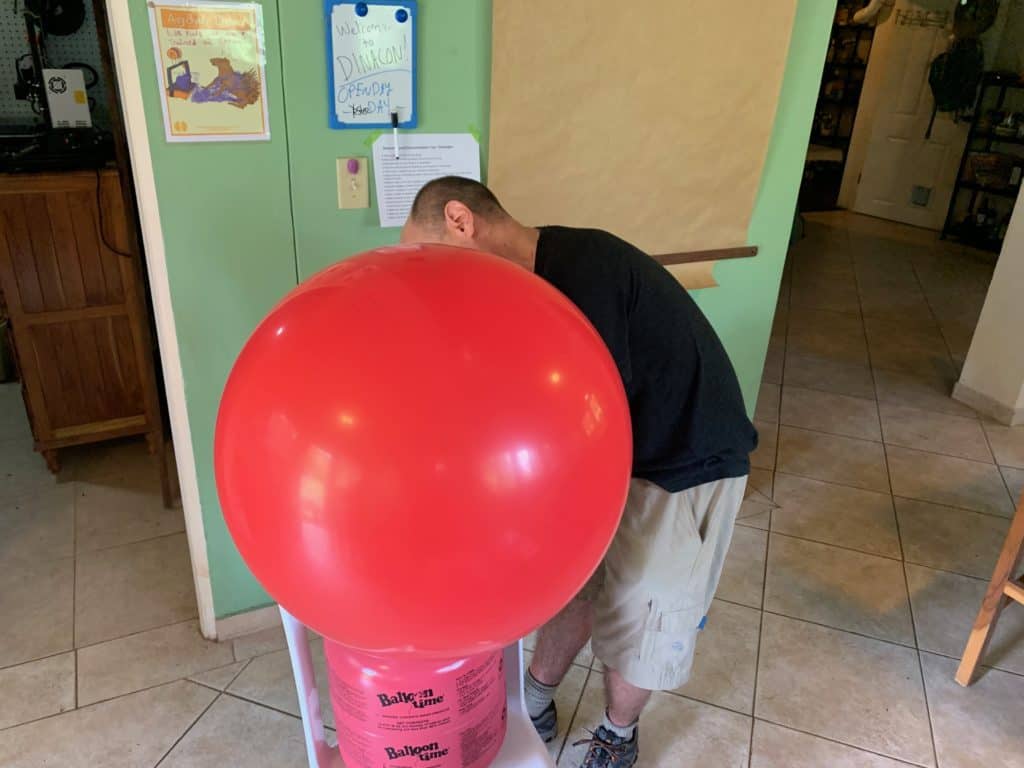

– Solar print living creatures! I attempted this at Dinacon with a centipede, as did Andy Quitmeyer with some leafcutter ants. It’s difficult to do! One reason is that living creatures tend to move around and solar prints require a few minutes of exposure time. I was thinking that something like a frog, which might hop around a bit, stay still, then hop around some more, would work, but you would still need some kind of clear container that would hold the animal without casting its own shadow. I also thought maybe a busy leafcutter ant “highway” would have dense enough traffic to leave behind ghostly ant trails, but Andy discovered that the ants are not keen to walk over solar paper laid in their path. A custom rig like the one discussed above could maybe be used–place the rig in their path, allow them time to acclimate to its presence and walk over it, then expose the paper underneath them without disturbing their work.

– Projection map visuals onto the 3D prints! These pieces were created to be static paintings, but they could also make for cool three-dimensional animated pieces. Bigger would be better for this purpose.
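For the non-rectangular pieces mentioned in the list above, “dropping out” the negative space could be as simple as discarding every pixel darker than some cutoff before building the model. The sketch below is one hedged guess at that step, not a description of what Photoshop does: `drop_out_background`, `kept_cells`, and the `threshold` value are hypothetical, and the input is assumed to be a grid of 0 to 255 luminance values in which the fully exposed background is the darkest region.

```python
def drop_out_background(pixels, threshold=40):
    """Keep pixels brighter than the threshold; mark the rest as None."""
    return [[p if p > threshold else None for p in row] for row in pixels]


def kept_cells(masked):
    """Return (x, y) for each grid cell whose four corners all survived.

    Only these cells would be turned into geometry, so the outline of
    the model follows the shadow instead of the rectangular paper.
    """
    cells = []
    for y in range(len(masked) - 1):
        for x in range(len(masked[0]) - 1):
            corners = (
                masked[y][x],
                masked[y][x + 1],
                masked[y + 1][x],
                masked[y + 1][x + 1],
            )
            if all(c is not None for c in corners):
                cells.append((x, y))
    return cells


# A bright 2x2 "object" in the top-left corner of a dark background.
print_pixels = [
    [200, 200, 10],
    [200, 200, 10],
    [10, 10, 10],
]
cells = kept_cells(drop_out_background(print_pixels))
```

Here only the single cell covering the bright object survives. The right cutoff would depend on how deep a blue the print develops, so it would need tuning for each print.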